Intercept AI Policy

Purpose

At Intercept, we use AI responsibly and transparently. Our approach is human-led and AI-supported. This document outlines Intercept’s current practices for AI usage, tool governance, and risk management. It is intended to clarify the procedures Intercept follows and the principles we apply to ensure that AI is used responsibly and in accordance with the interests of our clients.

Guiding principles

Intercept’s use of AI is governed by a set of principles that ensure the technology enhances the quality and integrity of our work:

Human-led

AI is used to assist, not replace, our teams. Strategic and creative direction and final outputs are always led and validated by Intercept personnel. AI-generated content is reviewed by team members prior to delivery.

Commitment to quality

AI is used to increase efficiency and expand creative possibilities. Accuracy, clarity, and brand consistency remain the responsibility of Intercept at all times, regardless of tool involvement.

Accountability and oversight

All client deliverables, including those involving AI-assisted workflows, remain the responsibility of Intercept. AI outputs are verified by our personnel for accuracy and compliance prior to delivery.

Transparency

We disclose the use of AI in client work when it meaningfully contributes to the final output. Levels of disclosure are based on the degree of AI involvement (see Tool classification and disclosure).

Bias awareness and inclusion

We work to reduce unintended bias in outputs by carefully curating AI tools and designing our prompts.

Governance: The Spark Council

AI usage at Intercept is governed by the Spark Council, a cross-functional body responsible for AI strategy, oversight, and enablement. The council includes representatives from operations, strategy, content, creative, and account departments, and is accountable for ensuring that AI tools and practices align with both internal policies and client expectations.

The Spark Council is responsible for:

- Evaluating and approving all AI tools before they are deployed for agency-wide use

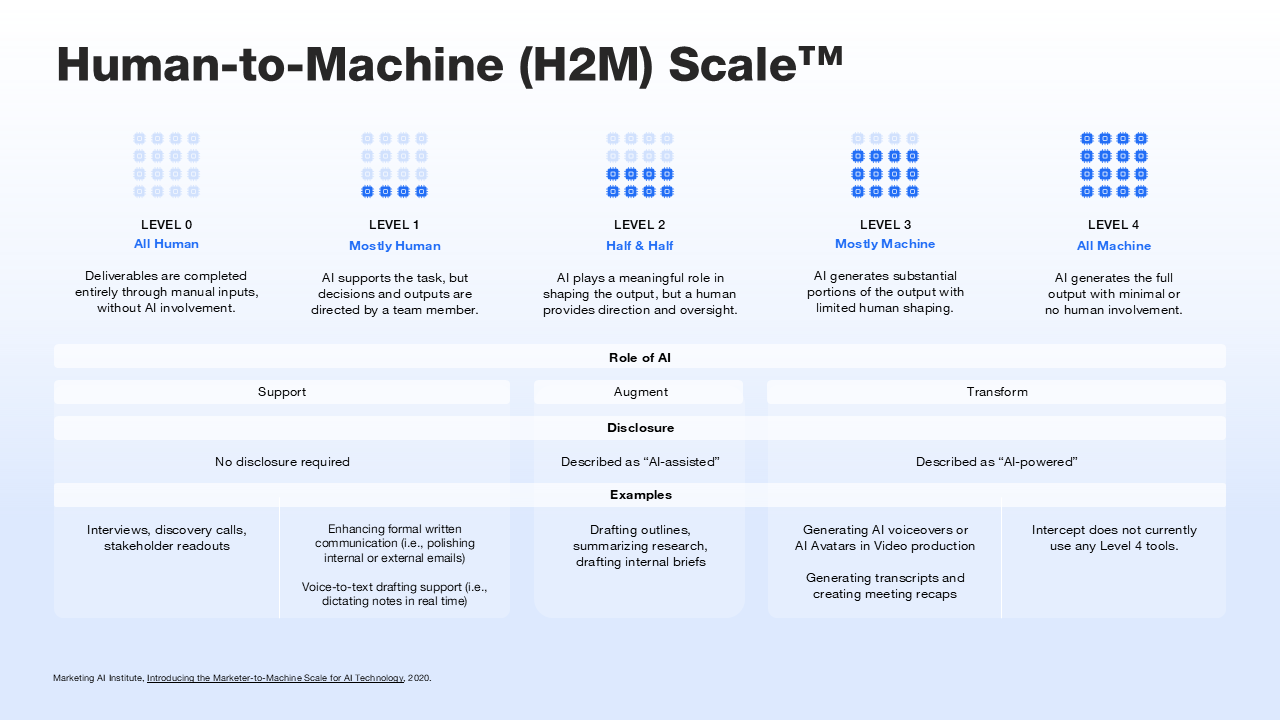

- Classifying tools using the Human-to-Machine (H2M) Scale™ developed by the Marketing AI Institute

- Updating this AI policy on a quarterly basis

- Supporting employee training through onboarding materials and approved use case guidance

AI tool evaluation process

Before any AI tool is approved for use at Intercept, it must pass a structured, multi-step review process:

- Initial screening: Rapid scan for disqualifying risks, including unauthorized training on user data, lack of data isolation, or major security gaps

- Checklist review: Detailed evaluation of security practices, privacy controls, intellectual property handling, and operational fit

- Pilot testing: Limited rollout in a real-world setting to assess usability, performance, and risk before broader adoption

All tools are reviewed across four key dimensions: privacy, security, intellectual property, and strategic fit.
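As an illustration only, the three review stages above can be read as sequential gates, where a tool that fails one stage does not advance to the next. The sketch below is a minimal model under that assumption. The names `ToolReview`, `evaluate`, and the risk flags are hypothetical and are not part of Intercept's actual tooling, and the checklist is assumed to be keyed by the four dimensions listed above.

```python
from dataclasses import dataclass, field

# Hypothetical identifiers for illustration; the policy does not prescribe a data model.
DIMENSIONS = ("privacy", "security", "intellectual_property", "strategic_fit")
DISQUALIFYING_RISKS = {
    "trains_on_user_data",
    "no_data_isolation",
    "major_security_gap",
}


@dataclass
class ToolReview:
    name: str
    risks: set = field(default_factory=set)        # flags raised in initial screening
    checklist: dict = field(default_factory=dict)  # dimension -> passed (bool)
    pilot_passed: bool = False


def evaluate(review: ToolReview) -> str:
    """Return 'approved' or the stage at which the tool was rejected."""
    # Stage 1: initial screening for disqualifying risks.
    if review.risks & DISQUALIFYING_RISKS:
        return "rejected: initial screening"
    # Stage 2: checklist review; a missing dimension counts as a failure.
    if not all(review.checklist.get(d, False) for d in DIMENSIONS):
        return "rejected: checklist review"
    # Stage 3: pilot testing in a limited rollout.
    if not review.pilot_passed:
        return "rejected: pilot testing"
    return "approved"
```

In this sketch a dimension with no recorded result is treated as a failure, which reflects the assumption that a tool is not approved by default.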

AI usage guidelines

Intercept permits the use of AI tools under defined conditions that promote quality and ensure data protection.

Approved uses

The following AI-assisted activities are permitted when using tools that have been vetted and approved by Intercept. In all cases, accuracy review and final accountability remain with Intercept personnel.

- Brainstorming and concept generation

- Summarization of meeting notes, research, or transcripts

- Drafting content outlines, messaging frameworks, or structuring content

- Copywriting and initial content drafting (AI involvement disclosed in scope of work)

- Repurposing approved content into new formats or channels (e.g., blog to social copy), with disclosure when AI meaningfully contributes to the final output

Conditional uses

The following use cases may proceed with additional review, oversight, or disclosure requirements:

- Drafting of client-facing materials (e.g., emails), which must be reviewed and finalized by a designated team member

- AI-supported contributions to strategic planning or scenario modeling, with human-led direction and validation

- Generating portions of deliverables that may be externally published or that require contract-level transparency

Prohibited uses

The following uses of AI are strictly prohibited:

- Using an AI tool for client work that is explicitly prohibited under the client’s AI policy as communicated to us, or under contractual agreement. In the event of a conflict between Intercept’s internal AI policy and a client’s policy or contractual terms, the client’s policy and contractual terms take precedence.

- Inputting personally identifiable information (PII), highly confidential or classified client data, or sensitive materials into any AI system.

- Allowing client-confidential information to be used in ways that could train or inform external AI platforms (see “Use of client content in AI platforms” section).

- Using unapproved or unreviewed AI tools at any stage of deliverable development, including drafting, editing, or final client deliverable.

- Using AI to develop proprietary intellectual property, strategic frameworks, or thought leadership without documented leadership approval.

- Bypassing agency vetting processes by using non-approved browser extensions or personal AI accounts.

- Using agentic AI tools, defined as systems capable of taking autonomous actions such as booking travel, executing transactions, or integrating with calendars, email, or payment systems. These tools are strictly prohibited on any device used for internal Intercept operations or for client-facing work, unless and until they have been formally reviewed and approved by the Spark Council.

- Using any AI tool that has not been formally vetted and approved by the Spark Council.

- Using AI in ways that are illegal, discriminatory, deceptive, or harmful, including impersonating individuals, generating misleading content presented as factual, or producing content that violates applicable laws or regulations.

Use of client content in AI platforms

Intercept does not permit the use of confidential or proprietary client information in any AI platform where such data may be retained, accessed, or used to train underlying models.

To that end, all third-party AI tools used by Intercept undergo a formal evaluation process by the Spark Council to:

- Ensure client inputs are not used to train or improve public AI models, unless the content is already publicly available and non-confidential

- Configure platform settings to prevent data retention or reuse wherever supported

- Prohibit entry of unpublished or sensitive client material into tools that do not offer explicit data isolation guarantees

This policy applies to any non-public or proprietary content. Information that is already published, such as website copy, press releases, or public presentations, may be used where appropriate, provided it does not breach confidentiality or contractual terms.

Tool classification and disclosure

Intercept classifies AI tools based on the level of machine autonomy involved in their use.

To do this, Intercept uses the Human-to-Machine (H2M) Scale™ developed by the Marketing AI Institute. This industry-recognized framework categorizes tools from Level 0 (fully human-driven) to Level 4 (fully autonomous). The higher the level of machine involvement, the greater the scrutiny, validation, and transparency required.

The Spark Council applies the H2M Scale™ to:

- Assess how each tool is used in practice

- Determine the level of internal oversight or quality review

- Establish the appropriate level of disclosure of AI involvement to our clients: the greater the role AI plays in shaping the output, the higher the requirement for visibility in client documentation
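The only ordering rule this section states is that scrutiny and disclosure rise with the H2M level. The sketch below is one way to check that a proposed mapping of levels to requirements respects that rule. It does not define the requirements themselves, since this policy does not specify them. The names `H2MLevel` and `respects_policy_ordering` are hypothetical, only the endpoint levels are described in this policy, and "rises with" is assumed to mean "never decreases".

```python
from enum import IntEnum


class H2MLevel(IntEnum):
    """Levels 0 to 4; only the two endpoints are described in this policy."""
    LEVEL_0 = 0  # fully human-driven
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4  # fully autonomous


def respects_policy_ordering(requirement_by_level: dict) -> bool:
    """Check that a requirement rank never decreases as the level rises.

    `requirement_by_level` maps every H2MLevel to an integer rank, where a
    larger rank means more scrutiny or disclosure (hypothetical scheme).
    """
    ranks = [requirement_by_level[level] for level in sorted(H2MLevel)]
    return all(lower <= higher for lower, higher in zip(ranks, ranks[1:]))
```

A mapping that assigned a Level 3 tool less disclosure than a Level 1 tool would fail this check.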

Disaster recovery and redundancies

In the event that an AI platform becomes unavailable, Intercept maintains protocols to ensure continuity of delivery without delay or compromise.

We have documented standard operating procedures (SOPs) for all core workflows, enabling teams to revert to fully manual processes when necessary, or to transition to a vetted alternative without impacting project timelines or deliverable quality.

Policy administration

This policy is reviewed on a quarterly basis by the Spark Council. For any questions regarding this policy, please reach out to: [email protected]